All warfare is based on deception. Hence, when able to attack, we must seem unable; when using our forces, we must seem inactive; when we are near, we must make the enemy believe we are far away; when far away, we must make him believe we are near.

Sun Tzu

In our previous post, I provided background on the industry’s concept of threat hunting as it stands today. In this second part, I would like to delve a bit deeper into one of the active defence tactics mentioned there, namely controlled attack paths, and what they mean in the context of cyber deception.

Cyber Deception: The Art of Shaping Threat Actor Behaviour

Ever heard of controlled attack paths? Probably not in the context of cyber; even Google offers little to no information about it.

Controlled Attack Paths is the term I use to refer to Cyber Deception as the art of intentionally shaping threat actor behaviour.

I know what you are thinking: how can you shape an attacker’s behaviour? Isn’t it more likely that attacker behaviour will shape your defensive stance? Isn’t it more likely that the attackers will find your weaknesses before you do? Well, as with most complex things: yes and no. What if I told you that most organizations are already shaping threat actors’ behaviour, in one way or another?

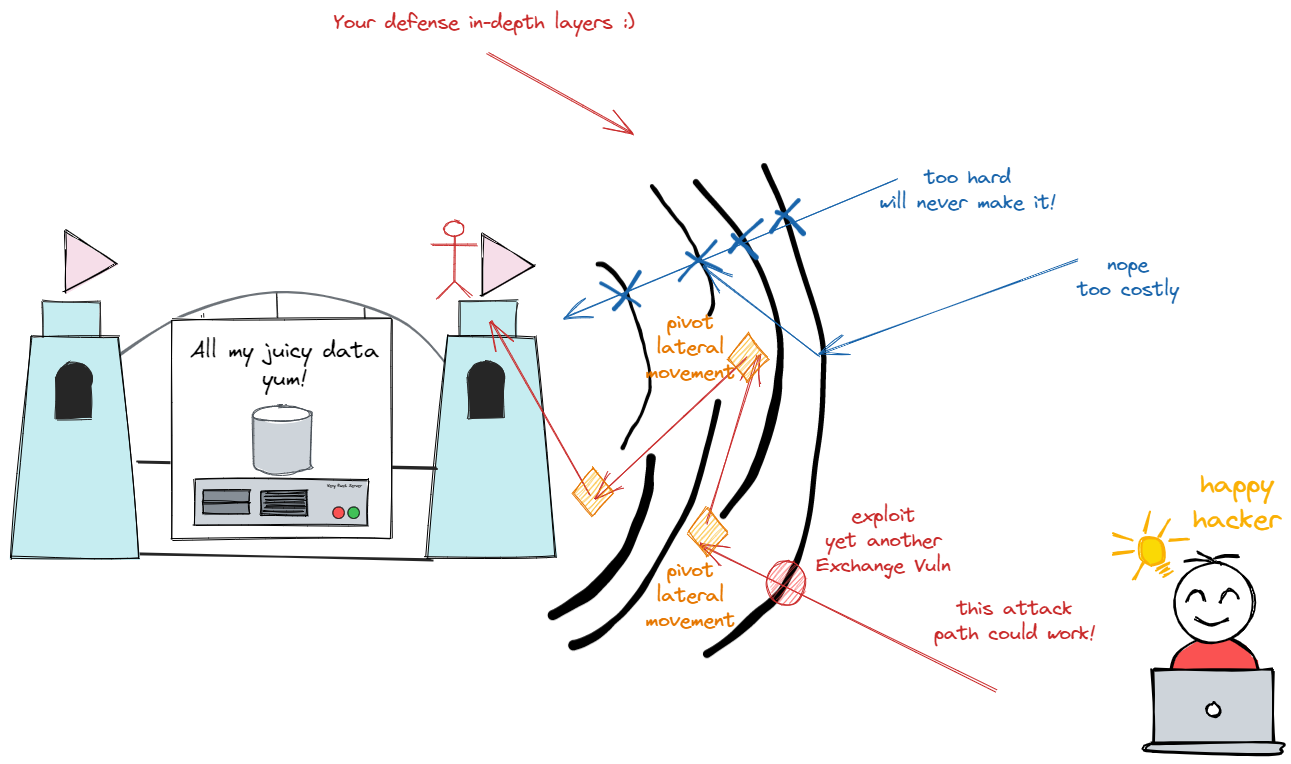

The traditional approach that defensive operations adopt in cyber warfare is to deploy protective, detective and responsive controls. This multi-layered approach, in which each layer may itself consist of further layers, is what’s called defence in depth.

When threat actors attempt to breach your defences, they must evade, circumvent, exploit or bypass each of those layers. In the best case, they are stopped outright by one of your security controls. In many instances, though, they are only hindered and delayed, which is still a win for defenders, since anything that buys us time is crucial to effective defence.

As attackers try to compromise our defences, though, they leave traces behind: their actions generate ripples across our interconnected systems (remember SANS’ “malware can hide, but it must run”?). It is the defenders’ task to pick up these weak signals, amplify them, and respond to them.

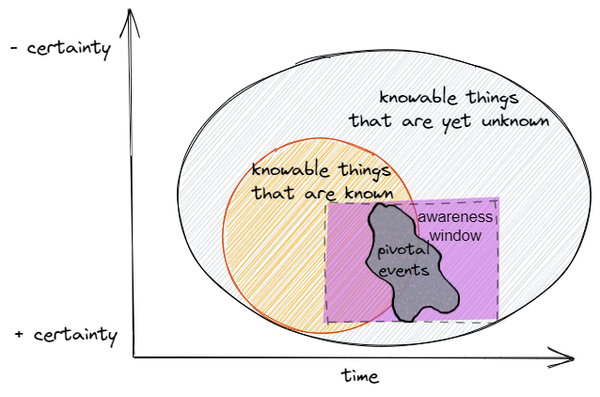

Most importantly, however, the particular way in which our defences are layered and interconnected creates a unique cyber ecosystem that influences attacker behaviour. No two environments are the same. Unbeknownst to most cyber defenders, the specific configuration of your defence in depth shapes attacker behaviour. It shapes it in such a way that some attack paths are preferred over others, which means some TTPs will be better suited to penetrating your infrastructure. It also shapes it in such a way that an attacker choosing a particular attack chain will generate a distinctive fingerprint in your environment.

Passively, then, you are already influencing attacker behaviour through the very particular way in which your network is built.
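To make the idea of a "preferred" path concrete, here is a minimal sketch that models an environment as a weighted directed graph, where each edge weight is a hypothetical "attacker effort" imposed by the controls guarding that hop, and uses Dijkstra's algorithm to find the cheapest route. The node names and costs are invented for illustration; the only point is that, given this particular configuration, a cost-minimising attacker is funnelled down one path rather than another.

```python
import heapq


def cheapest_path(graph, start, goal):
    """Dijkstra's algorithm: return (total_cost, path) of the lowest-effort route.

    graph maps each node to a dict of {neighbour: effort_cost}.
    Returns (float("inf"), []) if goal is unreachable from start.
    """
    # Priority queue of (cost so far, node, path taken to reach node)
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, effort in graph.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + effort, neighbour, path + [neighbour]))
    return float("inf"), []


# Hypothetical environment: each cost stands in for the effort a control imposes.
environment = {
    "internet": {"vpn_gateway": 8, "mail_gateway": 3},
    "vpn_gateway": {"file_server": 2},
    "mail_gateway": {"workstation": 2},
    "workstation": {"file_server": 4, "domain_controller": 9},
    "file_server": {"domain_controller": 3},
}

cost, path = cheapest_path(environment, "internet", "domain_controller")
print(cost, path)
```

Here the cheapest route runs through the mail gateway (total effort 12). Raising the cost of the mail_gateway to workstation hop from 2 to 6 would not stop this attacker; it would redirect them via the VPN gateway (13 versus 16), which is exactly the lever controlled attack paths try to pull deliberately rather than by accident.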

Controlled Attack Paths

Going back to our earlier question, though: even if we exert some level of influence over an attacker’s behaviour, isn’t it more likely that the attackers will find your weaknesses before you do?

The short answer is: I hope so, as long as that weakness is one we planted there intentionally!

Malicious adversaries display different levels of persistence, depending on their motivation, resources and goals. Under normal conditions, it takes a more sophisticated and resourceful attacker to get past the defences of a well-protected company. Eventually, though, with enough patience and the right context (e.g. a newly discovered zero-day, or a weakened defensive posture after a major change to company infrastructure), an attacker might find their way into your network and to your precious data.

If we assume that a breach will eventually happen, why not dedicate our efforts to crafting specific weak spots (e.g. web services, AD accounts) that are safe to deploy and act as decoys, enticing adversaries to follow a path that looks plausible and promises a good ROI?

Controlled Attack Paths are precisely that: intentionally crafted paths, planted with a purpose.

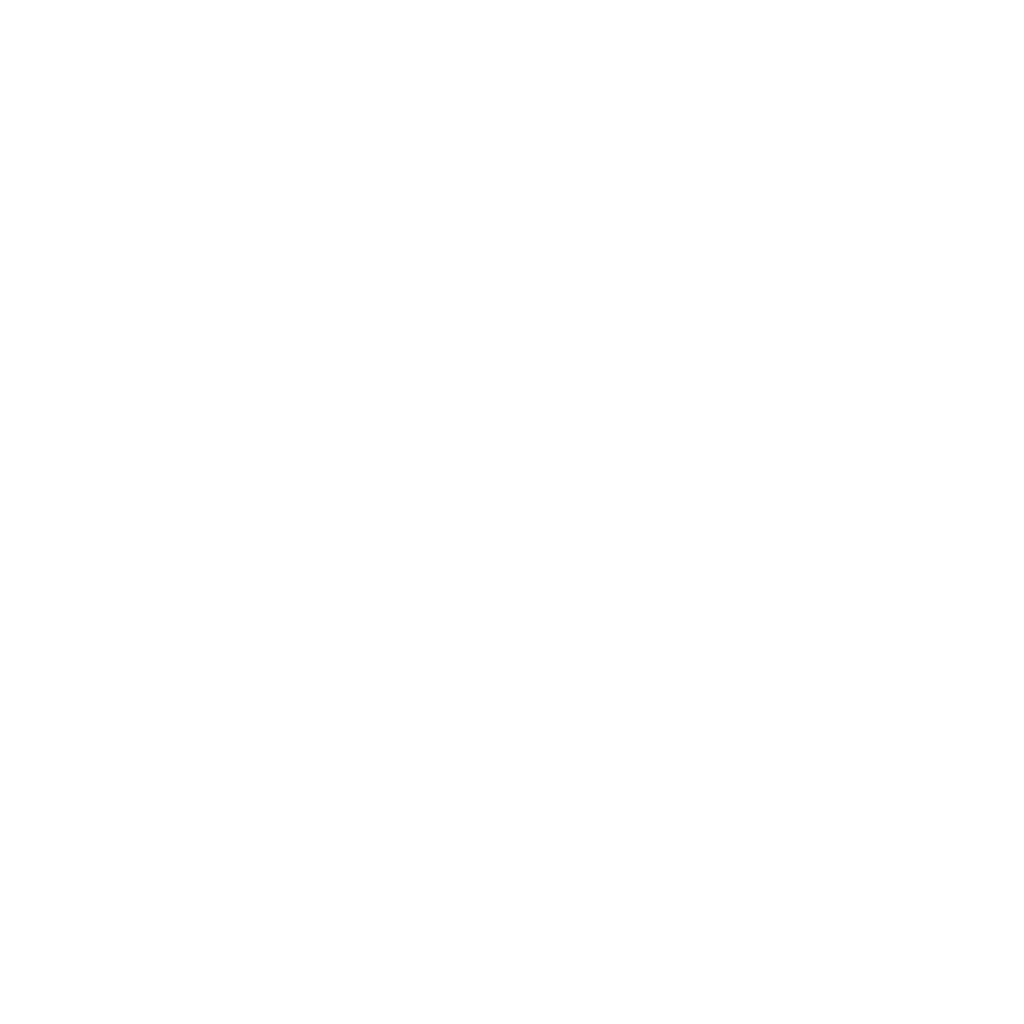

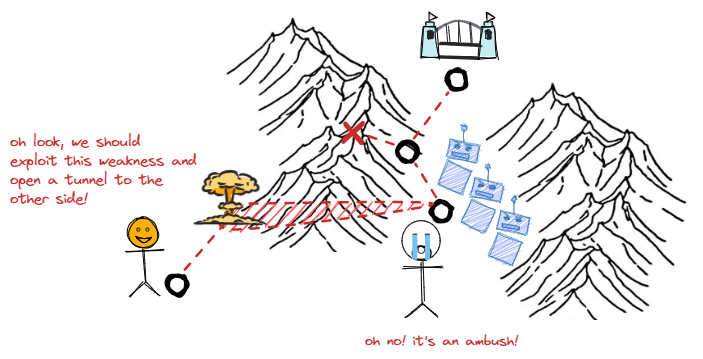
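A decoy AD account is a good example of a safe-to-deploy weak spot: because no legitimate user or process should ever authenticate with it, any attempt to use it is a high-fidelity signal. The minimal sketch below assumes authentication events have already been normalised into dictionaries with hypothetical `account`, `source_host` and `timestamp` fields, and that the decoy account names (also hypothetical) are known; it is not tied to any particular SIEM or log format, and the sample events are made up.

```python
from dataclasses import dataclass

# Hypothetical decoy accounts planted along a controlled attack path.
DECOY_ACCOUNTS = {"svc_backup_legacy", "adm_sqlreport"}


@dataclass
class DecoyAlert:
    account: str
    source_host: str
    timestamp: str


def find_decoy_usage(auth_events, decoys=DECOY_ACCOUNTS):
    """Return an alert for every authentication event that touches a decoy account.

    Account names are compared case-insensitively, since Windows account
    names are not case-sensitive.
    """
    decoys_lower = {name.lower() for name in decoys}
    alerts = []
    for event in auth_events:
        if event["account"].lower() in decoys_lower:
            alerts.append(DecoyAlert(event["account"], event["source_host"], event["timestamp"]))
    return alerts


# Made-up sample events in the assumed normalised format.
events = [
    {"account": "jsmith", "source_host": "WS-0142", "timestamp": "2023-03-01T09:12:00Z"},
    {"account": "SVC_BACKUP_LEGACY", "source_host": "WS-0377", "timestamp": "2023-03-01T02:47:10Z"},
]

for alert in find_decoy_usage(events):
    print(f"Decoy account {alert.account} used from {alert.source_host} at {alert.timestamp}")
```

The design choice worth noting is that there is no baseline or threshold: the decoy's value comes from the fact that its legitimate usage is, by construction, zero.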

What does this mean for threat hunting, then? Well, think of how tribal hunters in the physical world “hunt”: they don’t just chase after their food with spears, bows and arrows. They are usually cleverer than that, and have a deep knowledge of their terrain. This allows them to set bait, lures and distractions in order to:

a. capture their prey with previously deployed traps

b. lead the target to an ambush (where we can again use the arrows and spears)

In both cases, an experienced hunting or scouting party influences the target’s behaviour in such a way that it ends up following the path controlled by the party. This requires knowledge of the landscape but, most of all, a shift in mindset: from thinking in terms of sequential lists to thinking in terms of dynamic graphs! Many posts have been written about this, but I find @JohnLaTwC’s defender mindset particularly insightful. As John puts it:

“Your network is a directed graph of credentials. Hacking is graph traversal. See the graph or all you’ll see is exfil.”
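This framing translates quite directly into code. In the minimal sketch below, nodes are hosts or accounts and a directed edge means "credentials obtainable here grant access there". A depth-first search enumerates every simple path from an assumed foothold to a crown-jewel asset, and we then check which of those paths pass through a planted decoy, i.e. which routes we actually control. The graph is a hypothetical view of the environment as an attacker might perceive it after reconnaissance, in which the decoys advertise an onward route; every node name is invented.

```python
def all_simple_paths(graph, start, goal, path=None):
    """Yield every path from start to goal that never revisits a node (depth-first)."""
    path = (path or []) + [start]
    if start == goal:
        yield path
        return
    for neighbour in graph.get(start, []):
        if neighbour not in path:
            yield from all_simple_paths(graph, neighbour, goal, path)


def decoy_coverage(graph, foothold, target, decoys):
    """Split foothold-to-target paths into those crossing a decoy and those that don't."""
    covered, uncovered = [], []
    for p in all_simple_paths(graph, foothold, target):
        if decoys.intersection(p):
            covered.append(p)
        else:
            uncovered.append(p)
    return covered, uncovered


# Hypothetical credential graph as an attacker might see it after reconnaissance:
# an edge A -> B means credentials obtainable on A grant access to B.
# The decoy nodes advertise a route to domain_admin that leads nowhere real.
credential_graph = {
    "phished_laptop": ["helpdesk_admin", "decoy_svc_account"],
    "helpdesk_admin": ["file_server"],
    "decoy_svc_account": ["decoy_sql_server"],
    "file_server": ["domain_admin"],
    "decoy_sql_server": ["domain_admin"],
}
decoys = {"decoy_svc_account", "decoy_sql_server"}

covered, uncovered = decoy_coverage(credential_graph, "phished_laptop", "domain_admin", decoys)
print("Paths through a decoy:", covered)
print("Paths we do not control:", uncovered)
```

Every uncovered path is a route an attacker could take without ever touching our deception, so the goal is to shrink that list or make the decoy route the most attractive-looking one. Enumerating all simple paths is exponential in the worst case, so this only suits toy graphs; tools such as BloodHound apply graph analysis to real Active Directory environments.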

The Three Tactical Disadvantages of Defensive Ops

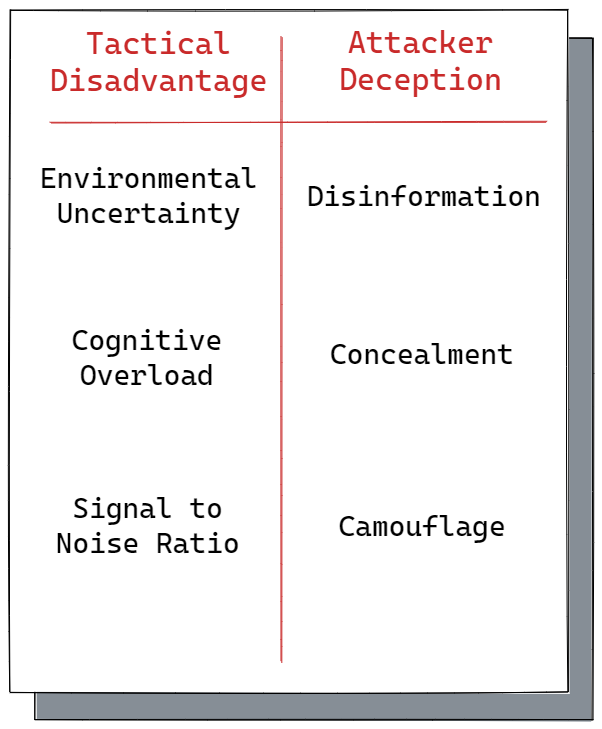

I know what you guys are thinking: the defender’s dilemma. This dilemma goes roughly like this: breaches are inevitable because defenders must be right 100% of the time whereas attackers only have to be right once. This thought pattern suggests that we can’t protect an organization because we are at a disadvantage. This asymmetry can be expressed in what I call the three tactical disadvantages of cyber defence:

- Environmental Uncertainty: generally, we don’t know where a successful attack is going to come from. Even when we can reasonably guess the top three entry vectors in our industry (e.g. phishing, drive-by downloads, etc.), we cannot be sure how an attack will manifest or which asset will be patient zero, the initial breach point. Environmental uncertainty refers to the defender’s inability to keep up with the continuous infrastructure, data, application and contextual changes that are pervasive in any modern organization.

- Cognitive Overload: attackers enjoy one very important, implicit advantage: they are not the only ones probing and trying to compromise an organization. Any given company faces hundreds, even thousands, of reconnaissance and attack attempts per day, and the alerts these attempts generate, from attackers all around the world, are triaged by exhausted security teams day in, day out. In effect, this amounts to a built-in DDoS attack on our limited cognitive capacity, making it hard to spot the one true positive in a sea of false positives. It means attackers can effortlessly multiply their strength just by riding the wave of relentless global attacks directed at any given organization. Alert fatigue, anyone?

- Signal to Noise Ratio: as if the defender’s task weren’t hard enough already, it is also difficult to get the signal-to-noise balance right. Defenders may be ingesting large amounts of low-value telemetry, which turns into technical debt when alerts are built on erroneous or incomplete data.
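The cognitive-overload and signal-to-noise problems are, at heart, a base-rate problem. A quick back-of-the-envelope calculation with hypothetical numbers illustrates it: even a detector that catches 99% of malicious events and misfires on only 1% of benign ones produces overwhelmingly false positives when malicious events are rare.

```python
def alert_precision(malicious, benign, true_positive_rate, false_positive_rate):
    """Fraction of fired alerts that are true positives (precision)."""
    true_positives = malicious * true_positive_rate
    false_positives = benign * false_positive_rate
    return true_positives / (true_positives + false_positives)


# Hypothetical day: 1,000,000 events, of which only 10 are malicious.
precision = alert_precision(malicious=10, benign=999_990,
                            true_positive_rate=0.99, false_positive_rate=0.01)
print(f"{precision:.4%} of alerts are real")  # roughly 0.1%
```

Roughly 10,000 alerts fire to surface about 10 real ones. This is also part of the appeal of decoys: because legitimate users have no reason to touch them, their false-positive rate can be driven very low, which pushes precision up.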

When you start thinking about security in terms of cyber warfare and the overall energy vectors in complex interactions, you will start to realize that, whether you like it or not, cyber adversaries are already employing deception techniques. Perhaps even unknowingly, they are already exerting some form of behaviour-shaping force on defenders by exploiting the three tactical disadvantages mentioned above. Our adversaries are already taking advantage of three types of deception that offer a high return for little effort: disinformation, concealment and camouflage. Each of these maps onto one of our tactical defensive weaknesses:

- Disinformation: cyber attackers can exploit the lack of understanding we have of our own digital landscape. It is hard to keep up with the complex environments that make up our digital infrastructure. They count on it, they count on poorly staffed detection and response teams, on complex environments with poor CMDB hygiene (I mean, CMDB is boring, what can you do!) and even worse understanding of the security controls deployed in the business (if you have a single pane of glass showing all of the security controls deployed in your business: I applaud you!). So, passively, without having to invest effort, threat actors are already tapping into this deception tactic.

- Concealment: it is very easy for attackers to go unnoticed amid the myriad phishing, reconnaissance, probing and exploit attempts that constantly hit any given business. Concealment exploits the fact that no matter how good your detection and response capabilities are, you will eventually miss something. Our cognitive awareness can only take us so far. The good news is that, to a degree, this also applies to threat actors: everybody needs to sleep, everybody gets tired, and resources are limited.

- Camouflage: in the sea of telemetry we collect from all of our systems, it is not hard to blend in with the noise. And if you are part of the noise, you can’t be part of the signal! Security research, however, is constantly pushing the limits of our knowledge and uncovering new things, so what was previously considered noise can quickly become a quality signal!

Engaging the Adversary

Instead of opposing force by force, one should complete an opposing movement by accepting the flow of energy from it and defeat it by borrowing from it. This is the law of adaptation.

Bruce Lee

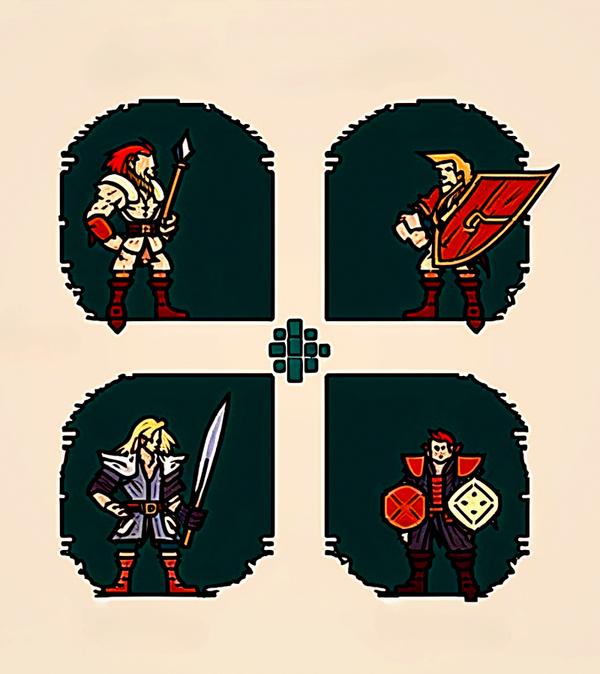

If you recall our little diagram from Part 1, I explained how, by general industry standards, Threat Hunting seems confined to the world of detection. Detection, in this sense, is conceived in terms of standard SOC operations: collect telemetry from the network, centralize it in a SIEM or similar, develop detection logic, monitor for alerts, and respond to them. Analysts are responsible for monitoring networks and systems to detect any potential threats or attacks. The only difference is that Threat Hunting actively runs detection queries to interrogate the environment, instead of waiting for a detection rule to be deployed and fire an alert.
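To illustrate what "actively running queries" looks like compared with waiting for an alert, here is a minimal sketch of one common hunting technique, frequency analysis (often called "stacking"): count how often each parent/child process pair occurs across the estate and surface the rare ones for an analyst to review. The event fields (`parent`, `child`), the `max_count` threshold and the sample telemetry are all assumptions; in practice this would be a query written in your SIEM's own language.

```python
from collections import Counter


def rare_process_pairs(process_events, max_count=1):
    """Return ((parent, child), count) pairs seen at most max_count times, rarest first."""
    counts = Counter((e["parent"].lower(), e["child"].lower()) for e in process_events)
    rare = [(pair, n) for pair, n in counts.items() if n <= max_count]
    return sorted(rare, key=lambda item: item[1])


# Hypothetical process-creation telemetry gathered from endpoints.
events = (
    [{"parent": "explorer.exe", "child": "chrome.exe"}] * 500
    + [{"parent": "services.exe", "child": "svchost.exe"}] * 300
    + [{"parent": "winword.exe", "child": "powershell.exe"}]
)

for (parent, child), n in rare_process_pairs(events):
    print(f"{parent} -> {child}: seen {n} time(s)")
```

Rare does not mean malicious; stacking simply points the analyst at the long tail. It also embodies the premise of reactive detection, that intruder activity will look anomalous, so it inherits the same blind spots when an attacker blends in with common behaviour.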

Now here’s the thing: it’s not detection per se that limits the concept of operations for Threat Hunting, it’s the approach to detection. The reactive detection paradigm is the foundation of all the technical solutions we have grown reliant upon.

Reactive detection is based on the premise that it suffices to deploy sensors in our internal landscape to identify intruder activity, since this activity would technically look anomalous. The problem with this approach is that it cannot escape the gravitational effects of the three tactical disadvantages of cyber defence ops.

Reactive detection is NOT in any way a pejorative term. It is an absolute foundational requirement for anyone who wants to do security seriously! Reactive detection is more a phase of detection and response than an actual function attached to a team. It represents a moment in the overall response chain.

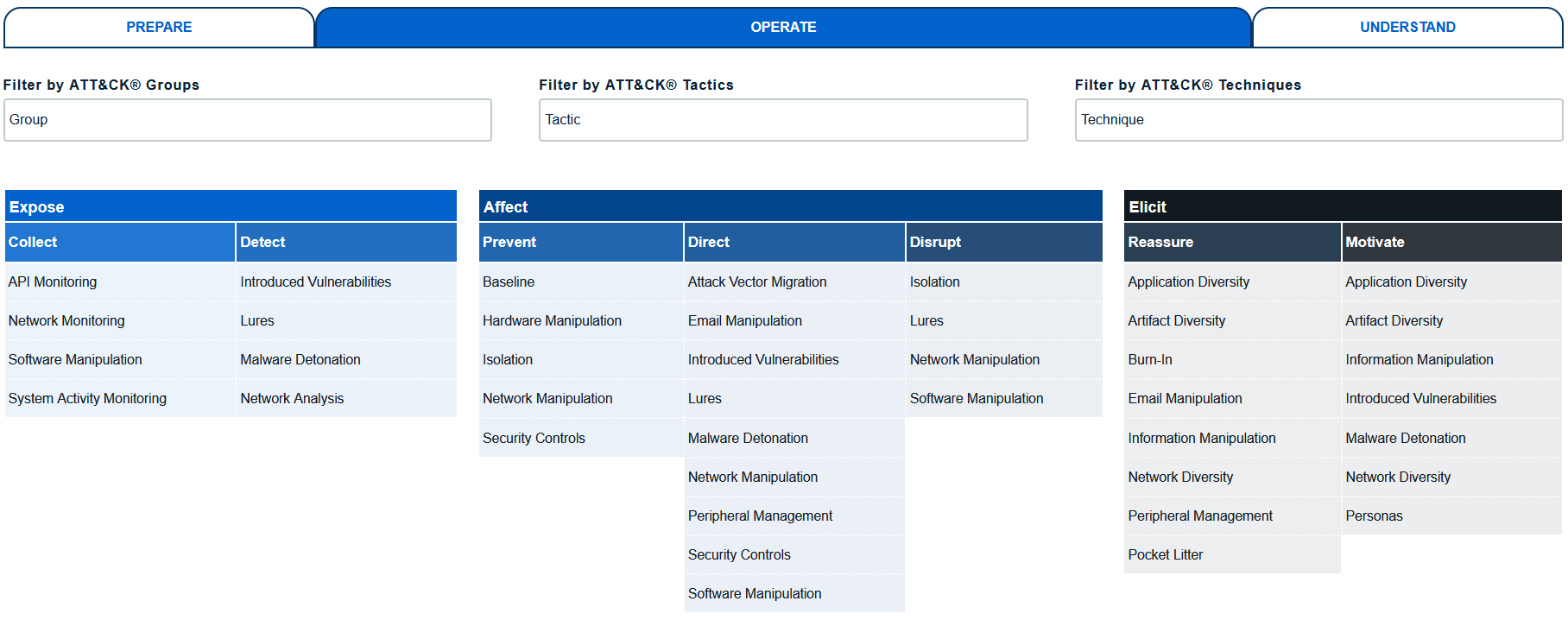

The problem with limiting hunting to this approach is that it ignores the engagement aspect of cyber defence. Wait, what? “Engaging the adversary”? Are you talking about “hacking back”? Not at all, my friend. Hacking back is too obvious, and too dangerous for organizations. There are more subtle ways to engage cyber threat actors. Everybody knows about MITRE ATT&CK, but have you ever heard of MITRE ENGAGE?

The MITRE ENGAGE project is designed to help organizations identify and defend against cyber adversaries. It provides a framework for engaging cyber adversaries by developing and executing cyber deception campaigns, which are designed to deceive adversaries and disrupt their activities.

As defenders, we tend not to engage with the adversary unless they are inside our network, and then only to evict them. That makes sense: as defenders, we are always trying to prevent the initial intrusion.

We are not inclined to let an intrusion run and see how things play out. This makes us (hopefully) effective at preventing attacks and mitigating damage, but the flip side is that we deny ourselves the chance to learn from the adversary beyond their initial steps. ENGAGE provides a way to seize that learning opportunity safely.

The first iteration of MITRE ENGAGE was called SHIELD, and you can check out the release paper here. MITRE ENGAGE provides a great framework and armoury of concepts to start exploring the topic of how to shift the balance of power in favour of defenders by applying the strengths of the adversary against themselves!

Yada, Yada, Yada

“Cool Diego, these all sound nice-ish, but do we really need deception?”

Well, that depends on your goals :) What do you really want to achieve in your organization? What are your cyber defence goals?

I found an interesting article on a Canadian blog that references a NATO paper, published in 2014, which already talks about the need for “cyber deception”:

NATO Best Practices in Computer Network Defence, published in 2014 and co-authored by a number of Canadian representatives, reinforced the need for cyber deception, forward-deployed intelligence collection and active defence. The Tallinn Manual on the International Law Applicable to Cyber Warfare (Rule 61 – Ruses) permits cyber deception operations during both war and peace as an effective means of defence.

Reports such as Cyber Deception Reduces Breach Costs & Increases SOC Efficiency (published in 2020) by deception technology vendor Attivo Networks estimate that organizations using cyber deception reduced data breach-related costs by 51% and SOC inefficiencies by 32%, compared to those not deploying deception technology.

In Part 3, we will explore these concepts further when I introduce a framework for adversarial cyber defence operations.

Until then, I hope you are finding these blog posts useful!

- I have no relationship with Attivo Networks and they are not sponsoring any of this content. It just happened to be an article supporting the need for cyber deception and, of course, they are a vendor, so… take it with a pinch of salt.